Fine-tuning your model

A summary of the lecture "Supervised Learning with scikit-learn", via DataCamp

- How good is your model?

- Logistic regression and the ROC curve

- Area under the ROC curve (AUC)

- Hyperparameter tuning

- Hold-out set for final evaluation

import pandas as pd

import numpy as np

import matplotlib.pyplot as plt

import seaborn as sns

How good is your model?

- Classification metrics

- Measuring model performance with accuracy:

- Fraction of correctly classified samples

- Not always a useful metric

- Class imbalance example: Emails

- Spam classification

- 99% of emails are real; 1% of emails are spam

- Could build a classifier that predicts ALL emails as real

- 99% accurate!

- But horrible at actually classifying spam

- Fails at its original purpose
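The spam example above can be checked with a few lines of plain Python. This is a minimal sketch on made-up labels (1000 emails, 1% spam), where the "classifier" simply predicts every email as real; the data and variable names are illustrative assumptions, not part of the lecture.

```python
# Hypothetical toy data: 1 = spam, 0 = real; 1% of 1000 emails are spam.
y_true = [1] * 10 + [0] * 990

# A "classifier" that labels every email as real.
y_pred = [0] * len(y_true)

# Accuracy: fraction of predictions that match the true labels.
correct = sum(t == p for t, p in zip(y_true, y_pred))
accuracy = correct / len(y_true)

# Number of spam emails that were actually flagged as spam.
spam_caught = sum(t == 1 and p == 1 for t, p in zip(y_true, y_pred))

print("Accuracy:", accuracy)
print("Spam emails caught:", spam_caught, "of", sum(y_true))
```

The accuracy looks excellent even though not a single spam email is detected, which is why accuracy alone can mislead on imbalanced classes.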

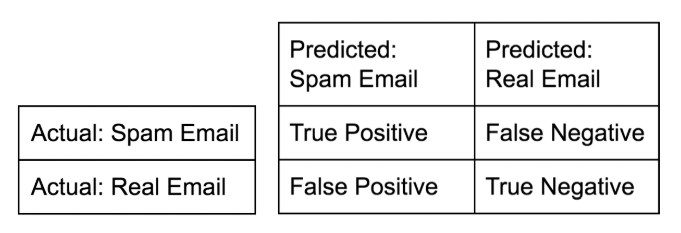

- Spam classification

- Diagnosing classification predictions

- Confusion matrix

- Accuracy: $$ \dfrac{tp + tn}{tp + tn + fp + fn} $$

- Precision (Positive Predictive Value): $$ \dfrac{tp}{tp + fp} $$

- Recall (Sensitivity, hit rate, True Positive Rate): $$ \dfrac{tp}{tp + fn} $$

- F1 score: Harmonic mean of precision and recall $$ 2 \cdot \dfrac{\text{precision} \cdot \text{recall}}{\text{precision} + \text{recall}} $$

- High precision: Not many real emails predicted as spam

- High recall: Predicted most spam emails correctly
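The formulas above can be turned into a small helper. The following is a sketch only: the confusion-matrix counts are invented for illustration, and returning 0.0 when a denominator is zero is a convention chosen here, not something the lecture specifies.

```python
def classification_metrics(tp, fp, fn, tn):
    """Return accuracy, precision, recall and F1 from confusion-matrix counts."""
    accuracy = (tp + tn) / (tp + tn + fp + fn)
    # Assumption: report 0.0 when a metric is undefined (zero denominator).
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if (precision + recall) else 0.0)
    return accuracy, precision, recall, f1


# Hypothetical counts for a spam filter evaluated on 1000 emails.
accuracy, precision, recall, f1 = classification_metrics(tp=8, fp=4, fn=2, tn=986)

print("Accuracy:  {:.3f}".format(accuracy))
print("Precision: {:.3f}".format(precision))
print("Recall:    {:.3f}".format(recall))
print("F1 score:  {:.3f}".format(f1))
```

With these counts the accuracy is very high, while precision and recall show that a third of the flagged emails were real and a fifth of the spam was missed.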

Metrics for classification

Accuracy is not always an informative metric. In this exercise, you will dive more deeply into evaluating the performance of binary classifiers by computing a confusion matrix and generating a classification report.

You may have noticed in the video that the classification report consisted of three rows, and an additional support column. The support gives the number of samples of the true response that lie in that class - so in the video example, the support was the number of Republicans or Democrats in the test set on which the classification report was computed. The precision, recall, and f1-score columns, then, gave the respective metrics for that particular class.

Here, you'll work with the PIMA Indians dataset obtained from the UCI Machine Learning Repository. The goal is to predict whether or not a given female patient will contract diabetes based on features such as BMI, age, and number of pregnancies. Therefore, it is a binary classification problem. A target value of 0 indicates that the patient does not have diabetes, while a value of 1 indicates that the patient does have diabetes. As in Chapters 1 and 2, the dataset has been preprocessed to deal with missing values.

df = pd.read_csv('./dataset/diabetes.csv')

df.head()

X = df.iloc[:, :-1]

y = df.iloc[:, -1]

from sklearn.metrics import classification_report, confusion_matrix

from sklearn.model_selection import train_test_split

from sklearn.neighbors import KNeighborsClassifier

# Create training and test set

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.4, random_state=42)

# Instantiate a k-NN classifier: knn

knn = KNeighborsClassifier(n_neighbors=6)

# Fit the classifier to the training data

knn.fit(X_train, y_train)

# Predict the labels of the test data: y_pred

y_pred = knn.predict(X_test)

# Generate the confusion matrix and classification report

print(confusion_matrix(y_test, y_pred))

print(classification_report(y_test, y_pred))

Logistic regression and the ROC curve

- Logistic regression for binary classification

- Logistic regression outputs probabilities

- If the probability is greater than 0.5:

- The data is labeled '1'

- If the probability is less than 0.5:

- The data is labeled '0'

- Probability thresholds

- By default, logistic regression threshold = 0.5

- Not specific to logistic regression

- k-NN classifiers also have thresholds

- ROC curves (Receiver Operating Characteristic curve)
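To make the role of the threshold concrete, here is a small sketch that converts predicted probabilities into labels at several thresholds. The probabilities and the helper `apply_threshold` are hypothetical, and sending a probability exactly equal to the threshold to class 0 is an assumption made for this example.

```python
# Hypothetical predicted probabilities of class 1 for five samples.
probabilities = [0.05, 0.35, 0.50, 0.65, 0.95]


def apply_threshold(probs, threshold):
    """Label a sample 1 when its probability exceeds the threshold, else 0."""
    return [1 if p > threshold else 0 for p in probs]


# Lower thresholds label more samples as 1; higher thresholds label fewer.
for threshold in (0.25, 0.5, 0.75):
    print(threshold, apply_threshold(probabilities, threshold))
```

Sweeping the threshold like this and recording the true and false positive rates at each step is what produces an ROC curve.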

Building a logistic regression model

Time to build your first logistic regression model! As Hugo showed in the video, scikit-learn makes it very easy to try different models, since the Train-Test-Split/Instantiate/Fit/Predict paradigm applies to all classifiers and regressors - which are known in scikit-learn as 'estimators'. You'll see this now for yourself as you train a logistic regression model on exactly the same data as in the previous exercise. Will it outperform k-NN? There's only one way to find out!

from sklearn.linear_model import LogisticRegression

# Create training and test sets

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.4, random_state=42)

# Create the classifier: logreg

logreg = LogisticRegression(max_iter=1000)

# Fit the classifier to the training data

logreg.fit(X_train, y_train)

# Predict the labels of the test set: y_pred

y_pred = logreg.predict(X_test)

# Compute and print the confusion matrix and classification report

print(confusion_matrix(y_test, y_pred))

print(classification_report(y_test, y_pred))

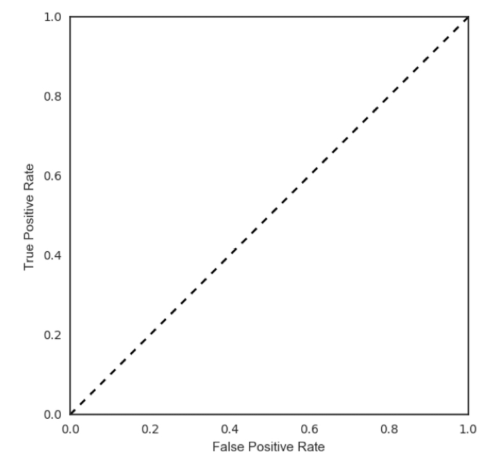

Plotting an ROC curve

Great job in the previous exercise - you now have a new addition to your toolbox of classifiers!

Classification reports and confusion matrices are great methods to quantitatively evaluate model performance, while ROC curves provide a way to visually evaluate models. As Hugo demonstrated in the video, most classifiers in scikit-learn have a .predict_proba() method which returns the probability of a given sample being in a particular class. Having built a logistic regression model, you'll now evaluate its performance by plotting an ROC curve. In doing so, you'll make use of the .predict_proba() method and become familiar with its functionality.
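Before using it, it may help to look at what .predict_proba() returns. The sketch below uses synthetic data from make_classification as a stand-in (an assumption; it is not the diabetes dataset) and names such as `clf_demo` are illustrative.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression

# Synthetic stand-in data (an assumption; not the diabetes dataset).
X_demo, y_demo = make_classification(n_samples=100, n_features=8, random_state=0)

clf_demo = LogisticRegression(max_iter=1000).fit(X_demo, y_demo)

# One row per sample, one column per class, ordered as in clf_demo.classes_.
proba = clf_demo.predict_proba(X_demo)
print(proba.shape)
print(clf_demo.classes_)

# Each row sums to 1; column 1 holds the probability of the positive class.
positive_proba = proba[:, 1]
print(np.allclose(proba.sum(axis=1), 1.0))
```

This is why the exercise code slices with `[:, 1]`: for a binary problem, only the positive-class column is needed for the ROC curve.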

from sklearn.metrics import roc_curve

# Compute predicted probabilities: y_pred_prob

y_pred_prob = logreg.predict_proba(X_test)[:, 1]

# Generate ROC curve values: fpr, tpr, thresholds

fpr, tpr, thresholds = roc_curve(y_test, y_pred_prob)

# Plot ROC curve

plt.plot([0, 1], [0, 1], 'k--')

plt.plot(fpr, tpr)

plt.xlabel('False Positive Rate')

plt.ylabel('True Positive Rate')

plt.title('ROC Curve')
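Each point on an ROC curve is a (false positive rate, true positive rate) pair at one threshold. The following pure-Python sketch computes those points by hand on four made-up samples, assuming a sample is labeled positive when its score is greater than or equal to the threshold and that both classes are present; `roc_point` is a hypothetical helper, not a scikit-learn function.

```python
def roc_point(y_true, scores, threshold):
    """Return (fpr, tpr) when samples with score >= threshold are labeled 1."""
    tp = fp = fn = tn = 0
    for label, score in zip(y_true, scores):
        predicted = 1 if score >= threshold else 0
        if predicted == 1 and label == 1:
            tp += 1
        elif predicted == 1 and label == 0:
            fp += 1
        elif predicted == 0 and label == 1:
            fn += 1
        else:
            tn += 1
    return fp / (fp + tn), tp / (tp + fn)


# Hypothetical labels and predicted scores for four samples.
y_true = [0, 0, 1, 1]
scores = [0.1, 0.4, 0.35, 0.8]

# Use every distinct score as a threshold, from strictest to most lenient.
roc_points = [roc_point(y_true, scores, t)
              for t in sorted(set(scores), reverse=True)]
print(roc_points)
```

As the threshold is lowered, both rates can only stay the same or increase, so the curve moves from the bottom-left toward the top-right corner.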

Precision-recall Curve

When looking at your ROC curve, you may have noticed that the y-axis (True positive rate) is also known as recall. Indeed, in addition to the ROC curve, there are other ways to visually evaluate model performance. One such way is the precision-recall curve, which is generated by plotting the precision and recall for different thresholds. As a reminder, precision and recall are defined as:

$$ \begin{aligned} \text{Precision} &= \dfrac{TP}{TP + FP} \\ \text{Recall} &= \dfrac{TP}{TP + FN} \end{aligned} $$

Study the precision-recall curve. Note that here, the class is positive (1) if the individual has diabetes.

from sklearn.metrics import precision_recall_curve

precision, recall, thresholds = precision_recall_curve(y_test, y_pred_prob)

plt.plot(recall, precision)

plt.xlabel('Recall')

plt.ylabel('Precision')

plt.title('Precision / Recall plot')

AUC computation

Say you have a binary classifier that in fact is just randomly making guesses. It would be correct approximately 50% of the time, and the resulting ROC curve would be a diagonal line in which the True Positive Rate and False Positive Rate are always equal. The Area under this ROC curve would be 0.5. This is one way in which the AUC, which Hugo discussed in the video, is an informative metric to evaluate a model. If the AUC is greater than 0.5, the model is better than random guessing. Always a good sign!

In this exercise, you'll calculate AUC scores using the roc_auc_score() function from sklearn.metrics as well as by performing cross-validation on the diabetes dataset.
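One way to build intuition for the 0.5 baseline: the AUC equals the fraction of (positive, negative) pairs in which the positive sample receives the higher score, with ties counted as half. The sketch below computes it that way in plain Python on made-up scores; `auc_by_ranking` is a hypothetical helper and is not how roc_auc_score() is implemented.

```python
def auc_by_ranking(y_true, scores):
    """AUC as the fraction of (positive, negative) pairs ranked correctly.

    A tie between a positive and a negative score counts as half a pair.
    """
    positives = [s for t, s in zip(y_true, scores) if t == 1]
    negatives = [s for t, s in zip(y_true, scores) if t == 0]
    total = 0.0
    for p in positives:
        for n in negatives:
            if p > n:
                total += 1.0
            elif p == n:
                total += 0.5
    return total / (len(positives) * len(negatives))


y_true = [0, 0, 1, 1]

# Every positive outranks every negative.
perfect = auc_by_ranking(y_true, [0.1, 0.2, 0.8, 0.9])
# An uninformative model that gives every sample the same score.
constant = auc_by_ranking(y_true, [0.5, 0.5, 0.5, 0.5])
# One of the four pairs is ranked the wrong way round.
mixed = auc_by_ranking(y_true, [0.1, 0.4, 0.35, 0.8])

print(perfect, constant, mixed)
```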

from sklearn.metrics import roc_auc_score

from sklearn.model_selection import cross_val_score

# Compute predicted probabilities: y_pred_prob

y_pred_prob = logreg.predict_proba(X_test)[:, 1]

# Compute and print AUC score

print("AUC: {}".format(roc_auc_score(y_test, y_pred_prob)))

# Compute cross-validated AUC scores: cv_auc

cv_auc = cross_val_score(logreg, X, y, cv=5, scoring='roc_auc')

# Print list of AUC scores

print("AUC scores computed using 5-fold cross-validation: {}".format(cv_auc))

Hyperparameter tuning

- Linear regression: Choosing parameters

- Ridge/Lasso regression: Choosing alpha

- k-Nearest Neighbors: Choosing n_neighbors

- Hyperparameters: Parameters like alpha and k

- Hyperparameters cannot be learned by fitting the model

- Choosing the correct hyperparameter

- Try a bunch of different hyperparameter values

- Fit all of them separately

- See how well each performs

- Choose the best performing one

- It is essential to use cross-validation

- Grid search cross-validation
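The steps above can be written out by hand before reaching for GridSearchCV. This is a minimal sketch, assuming synthetic data from make_classification as a stand-in for a real dataset; the candidate values and names such as `knn_demo` are illustrative.

```python
from sklearn.datasets import make_classification
from sklearn.model_selection import cross_val_score
from sklearn.neighbors import KNeighborsClassifier

# Synthetic stand-in data (an assumption; not the diabetes dataset).
X_demo, y_demo = make_classification(n_samples=200, n_features=8, random_state=42)

# Try a bunch of different values for the n_neighbors hyperparameter.
candidates = [1, 3, 5, 7, 9]

# Fit each candidate separately and record its mean 5-fold CV accuracy.
mean_scores = {}
for k in candidates:
    knn_demo = KNeighborsClassifier(n_neighbors=k)
    mean_scores[k] = cross_val_score(knn_demo, X_demo, y_demo, cv=5).mean()

# Choose the best performing one.
best_k = max(mean_scores, key=mean_scores.get)
print(mean_scores)
print("Best n_neighbors:", best_k)
```

GridSearchCV automates this loop, including the case where several hyperparameters are searched at once.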

Hyperparameter tuning with GridSearchCV

Like the alpha parameter of lasso and ridge regularization that you saw earlier, logistic regression also has a regularization parameter: $C$. $C$ controls the inverse of the regularization strength, and this is what you will tune in this exercise. A large $C$ can lead to an overfit model, while a small $C$ can lead to an underfit model.

The hyperparameter space for $C$ has been set up for you. Your job is to use GridSearchCV and logistic regression to find the optimal $C$ in this hyperparameter space.

You may be wondering why you aren't asked to split the data into training and test sets. Good observation! Here, we want you to focus on the process of setting up the hyperparameter grid and performing grid-search cross-validation. In practice, you will indeed want to hold out a portion of your data for evaluation purposes, and you will learn all about this in the next video!
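To see what "inverse of the regularization strength" means in practice, the sketch below fits logistic regression with a very small, a moderate, and a very large $C$ and compares the size of the learned coefficients. It uses synthetic data as a stand-in (an assumption), and the specific values of $C$ are chosen only for illustration.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression

# Synthetic stand-in data (an assumption; not the diabetes dataset).
X_demo, y_demo = make_classification(n_samples=200, n_features=8, random_state=0)

# Smaller C means stronger regularization, which shrinks the coefficients.
coef_norms = {}
for C in (1e-4, 1.0, 1e4):
    model = LogisticRegression(C=C, max_iter=5000).fit(X_demo, y_demo)
    coef_norms[C] = float(np.linalg.norm(model.coef_))

for C, norm in coef_norms.items():
    print("C = {:g}: coefficient norm = {:.4f}".format(C, norm))
```

Heavily shrunken coefficients (small $C$) limit how closely the model can follow the training data, while nearly unconstrained coefficients (large $C$) allow it to fit noise.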

from sklearn.linear_model import LogisticRegression

from sklearn.model_selection import GridSearchCV

# Set up the hyperparameter grid

c_space = np.logspace(-5, 8, 15)

param_grid = {'C':c_space}

# Instantiate a logistic regression classifier: logreg

logreg = LogisticRegression(max_iter=1000)

# Instantiate the GridSearchCV object: logreg_cv

logreg_cv = GridSearchCV(logreg, param_grid, cv=5)

# Fit it to the data

logreg_cv.fit(X, y)

# Print the tuned parameters and score

print("Tuned Logistic Regression Parameters: {}".format(logreg_cv.best_params_))

print("Best score is {}".format(logreg_cv.best_score_))

Hyperparameter tuning with RandomizedSearchCV

GridSearchCV can be computationally expensive, especially if you are searching over a large hyperparameter space and dealing with multiple hyperparameters. A solution to this is to use RandomizedSearchCV, in which not all hyperparameter values are tried out. Instead, a fixed number of hyperparameter settings is sampled from specified probability distributions. You'll practice using RandomizedSearchCV in this exercise and see how this works.

Here, you'll also be introduced to a new model: the Decision Tree. Don't worry about the specifics of how this model works. Just like k-NN, linear regression, and logistic regression, decision trees in scikit-learn have .fit() and .predict() methods that you can use in exactly the same way as before. Decision trees have many parameters that can be tuned, such as max_features, max_depth, and min_samples_leaf: This makes it an ideal use case for RandomizedSearchCV.
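To see what sampling a fixed number of settings from distributions looks like, scikit-learn's ParameterSampler can be used to draw a few candidates directly. This is only an illustration of the sampling idea, using the same kind of parameter dictionary as the exercise; the name `param_dist_demo`, the choice of `n_iter=5`, and the fixed `random_state` are assumptions made for the example.

```python
from scipy.stats import randint
from sklearn.model_selection import ParameterSampler

# Lists are sampled uniformly; scipy distributions are sampled via .rvs().
# randint(1, 9) draws integers from 1 to 8 (the upper bound is exclusive).
param_dist_demo = {
    "max_depth": [3, None],
    "max_features": randint(1, 9),
    "min_samples_leaf": randint(1, 9),
    "criterion": ["gini", "entropy"],
}

# Draw 5 hyperparameter settings instead of enumerating every combination.
sampled_settings = list(
    ParameterSampler(param_dist_demo, n_iter=5, random_state=0)
)
for setting in sampled_settings:
    print(setting)
```

A full grid over these values would contain 2 x 8 x 8 x 2 = 256 combinations, so evaluating only a handful of sampled settings is much cheaper.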

from scipy.stats import randint

from sklearn.tree import DecisionTreeClassifier

from sklearn.model_selection import RandomizedSearchCV

# Set up the parameters and distributions to sample from: param_dist

param_dist = {

"max_depth": [3, None],

"max_features": randint(1, 9),

"min_samples_leaf": randint(1, 9),

"criterion": ["gini", "entropy"],

}

# Instantiate a Decision Tree classifier: tree

tree = DecisionTreeClassifier()

# Instantiate the RandomizedSearchCV object: tree_cv

tree_cv = RandomizedSearchCV(tree, param_dist, cv=5)

# Fit it to the data

tree_cv.fit(X, y)

# Print the tuned parameters and score

print("Tuned Decision Tree Parameters: {}".format(tree_cv.best_params_))

print("Best score is {}".format(tree_cv.best_score_))

Hold-out set for final evaluation

- How well can the model perform on never before seen data?

- Using ALL data for cross-validation is not ideal

- Split data into training and hold-out set at the beginning

- Perform grid search cross-validation on training set

- Choose best hyperparameters and evaluate on hold-out set
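The workflow in this list can be sketched end to end as follows. The data is synthetic (an assumption, standing in for a real dataset), and the grid of $C$ values, the split size, and names such as `search` are illustrative choices.

```python
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import GridSearchCV, train_test_split

# Synthetic stand-in data (an assumption; not the diabetes dataset).
X_demo, y_demo = make_classification(n_samples=300, n_features=8, random_state=0)

# 1. Split off the hold-out set at the very beginning.
X_tr, X_hold, y_tr, y_hold = train_test_split(
    X_demo, y_demo, test_size=0.3, random_state=0, stratify=y_demo
)

# 2. Run grid search cross-validation on the training set only.
search = GridSearchCV(
    LogisticRegression(max_iter=1000), {"C": [0.01, 0.1, 1, 10]}, cv=5
)
search.fit(X_tr, y_tr)

# 3. Evaluate the refitted best model on data it has never seen.
holdout_accuracy = search.score(X_hold, y_hold)

print("Best parameters:", search.best_params_)
print("Mean CV accuracy on training set:", search.best_score_)
print("Hold-out accuracy:", holdout_accuracy)
```

The cross-validated score guides the choice of hyperparameters, while the hold-out score is the estimate of performance on unseen data.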

Hold-out set in practice I: Classification

You will now practice evaluating a model with tuned hyperparameters on a hold-out set. The feature array and target variable array from the diabetes dataset have been pre-loaded as X and y.

In addition to $C$, logistic regression has a 'penalty' hyperparameter which specifies whether to use 'l1' or 'l2' regularization. Your job in this exercise is to create a hold-out set and then tune the 'C' and 'penalty' hyperparameters of a logistic regression classifier using GridSearchCV on the training set.

from sklearn.model_selection import train_test_split, GridSearchCV

from sklearn.linear_model import LogisticRegression

# Create the hyperparameter grid

c_space = np.logspace(-5, 8, 15)

param_grid = {'C': c_space, 'penalty': ['l1', 'l2']}

# Instantiate the logistic regression classifier: logreg

logreg = LogisticRegression(max_iter=1000, solver='liblinear')

# Create train and test sets

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.4, random_state=42)

# Instantiate the GridSearchCV object: logreg_cv

logreg_cv = GridSearchCV(logreg, param_grid, cv=5)

# Fit it to the training data

logreg_cv.fit(X_train, y_train)

# Print the optimal parameters and best score

print("Tuned Logistic Regression Parameter: {}".format(logreg_cv.best_params_))

print("Tuned Logistic Regression Accuracy: {}".format(logreg_cv.best_score_))

Hold-out set in practice II: Regression

Remember lasso and ridge regression from the previous chapter? Lasso used the $L1$ penalty to regularize, while ridge used the $L2$ penalty. There is another type of regularized regression known as the elastic net. In elastic net regularization, the penalty term is a linear combination of the $L1$ and $L2$ penalties: $$ a * L1 + b * L2 $$

In scikit-learn, the balance between the two penalties is controlled by the 'l1_ratio' parameter: An 'l1_ratio' of 1 corresponds to a pure $L1$ penalty, and anything lower is a combination of $L1$ and $L2$.
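As a worked example of how 'l1_ratio' mixes the two penalties, the sketch below evaluates the penalty term as written in scikit-learn's ElasticNet documentation, alpha * l1_ratio * ||w||_1 + 0.5 * alpha * (1 - l1_ratio) * ||w||_2^2, for a made-up weight vector. The helper `elastic_net_penalty` and the weights are hypothetical and only illustrate the formula; they are not part of the exercise.

```python
def elastic_net_penalty(weights, alpha, l1_ratio):
    """Elastic net penalty for a weight vector.

    alpha * l1_ratio * ||w||_1 + 0.5 * alpha * (1 - l1_ratio) * ||w||_2^2
    """
    l1 = sum(abs(w) for w in weights)
    l2_squared = sum(w * w for w in weights)
    return alpha * l1_ratio * l1 + 0.5 * alpha * (1 - l1_ratio) * l2_squared


# Hypothetical weights: ||w||_1 = 7 and ||w||_2^2 = 25.
weights = [3.0, -4.0]

pure_l1 = elastic_net_penalty(weights, alpha=1.0, l1_ratio=1.0)
pure_l2 = elastic_net_penalty(weights, alpha=1.0, l1_ratio=0.0)
blend = elastic_net_penalty(weights, alpha=1.0, l1_ratio=0.5)

print(pure_l1, pure_l2, blend)
```

In the notation above, this corresponds to a = alpha * l1_ratio and b = 0.5 * alpha * (1 - l1_ratio), with the $L2$ term taken as the squared norm.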

In this exercise, you will use GridSearchCV to tune the 'l1_ratio' of an elastic net model trained on the Gapminder data. As in the previous exercise, use a hold-out set to evaluate your model's performance.

df = pd.read_csv('./dataset/gm_2008_region.csv')

df.drop(labels=['Region'], axis='columns', inplace=True)

df.head()

X = df.drop('life', axis='columns').values

y = df['life'].values

from sklearn.linear_model import ElasticNet

from sklearn.metrics import mean_squared_error

from sklearn.model_selection import GridSearchCV, train_test_split

# Create train and test sets

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.4, random_state=42)

# Create the hyperparameter grid

l1_space = np.linspace(0, 1, 30)

param_grid = {'l1_ratio': l1_space}

# Instantiate the ElasticNet regressor: elastic_net

elastic_net = ElasticNet(max_iter=100000, tol=0.001)

# Set up the GridSearchCV object: gm_cv

gm_cv = GridSearchCV(elastic_net, param_grid, cv=5)

# Fit it to the training data

gm_cv.fit(X_train, y_train)

# Predict on the test set and compute metrics

y_pred = gm_cv.predict(X_test)

r2 = gm_cv.score(X_test, y_test)

mse = mean_squared_error(y_test, y_pred)

print("Tuned ElasticNet l1 ratio: {}".format(gm_cv.best_params_))

print("Tuned ElasticNet R squared: {}".format(r2))

print("Tuned ElasticNet MSE: {}".format(mse))