Convolutional Neural Networks in PyTorch

In this third chapter, we introduce convolutional neural networks, learning how to train them and how to use them to make predictions. This is the summary of the lecture "Introduction to Deep Learning with PyTorch" via DataCamp.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F
import torchvision
import numpy as np
import pandas as pd
import matplotlib.pyplot as plt

plt.rcParams['figure.figsize'] = (8, 8)
```

Convolution operator

- Problems with fully connected neural networks
  - Do you really need to consider all the relations between the features?
  - Fully connected networks are big, and therefore computationally inefficient.
  - They have a very large number of parameters, so they tend to overfit.

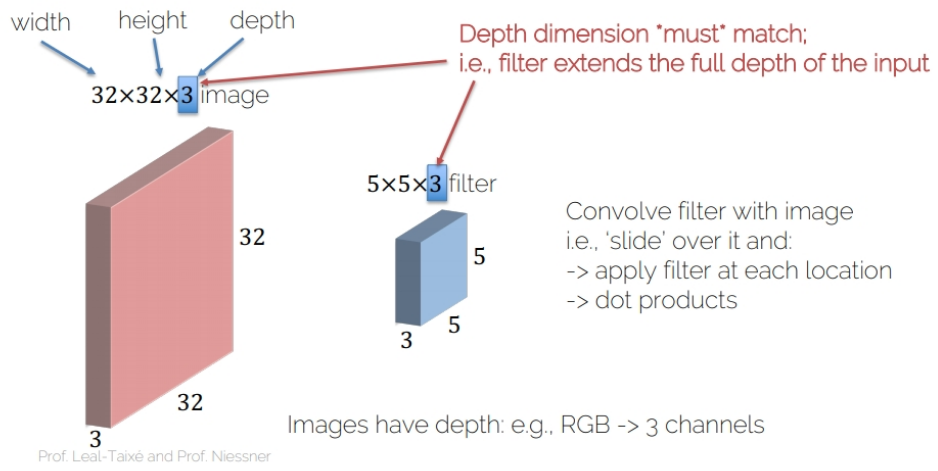

- Main idea of CNNs
  - Each unit is connected to only a few units (a local region) of the previous layer.
  - Units share weights: the same filter is applied at every position of the input.

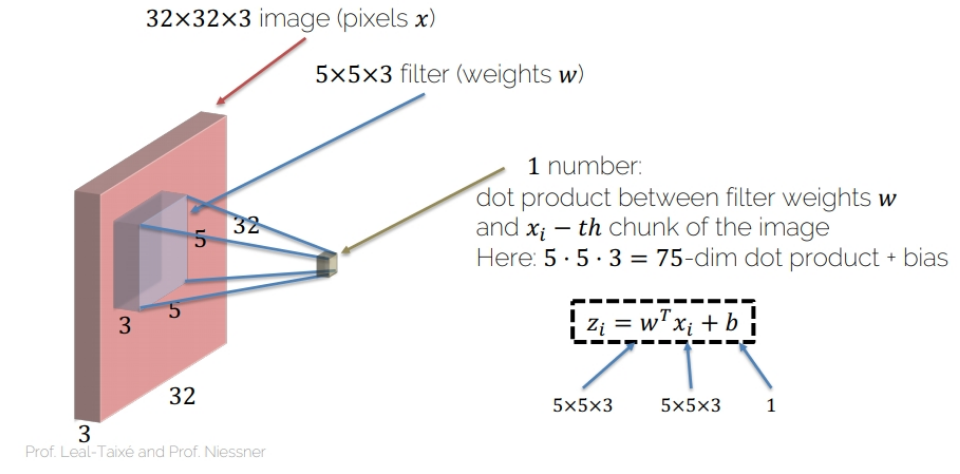

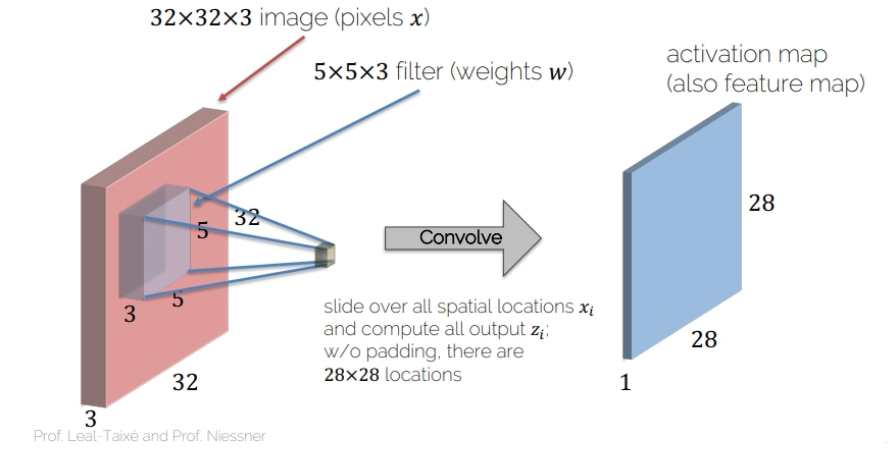
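To make the parameter argument concrete, here is a rough comparison under an assumed setup: mapping a 1x28x28 image to six 28x28 feature maps, once with a fully connected layer and once with a 3x3 convolution. The layer sizes are chosen for illustration only, and `count_params` is a hypothetical helper.

```python
import torch.nn as nn

# Assumed setup (illustration only): 1x28x28 input -> 6 feature maps of 28x28
fc = nn.Linear(1 * 28 * 28, 6 * 28 * 28)  # every output unit sees every pixel
conv = nn.Conv2d(in_channels=1, out_channels=6, kernel_size=3, padding=1)  # local, shared 3x3 filters


def count_params(module):
    """Hypothetical helper: total number of learnable values in a module."""
    return sum(p.numel() for p in module.parameters())


fc_params = count_params(fc)      # 784 * 4704 weights + 4704 biases
conv_params = count_params(conv)  # 6 * 1 * 3 * 3 weights + 6 biases
print(fc_params)    # 3692640
print(conv_params)  # 60
```

The convolution reaches the same output size with a tiny fraction of the parameters because each unit only looks at a 3x3 neighbourhood and all positions reuse the same six filters.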

- Convolution operation

- Activation map (feature map): the output obtained by convolving the input with one filter

```python
# A batch of 10 single-channel 28x28 images
images = torch.rand(10, 1, 28, 28)

# Build 6 conv. filters
conv_filters = nn.Conv2d(in_channels=1, out_channels=6, kernel_size=(3, 3), stride=1, padding=1)

# Convolve the image with the filters
output_feature = conv_filters(images)

print(output_feature.shape)  # torch.Size([10, 6, 28, 28])
```
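To see what each value of an activation map is, the following sketch recomputes a small convolution by hand: every output pixel is the sum of the element-wise product between the filter and the image patch under it, plus the bias. The tiny 5x5 input, the single filter and the helper `manual_conv2d` are assumptions made for illustration. Note that PyTorch's convolution does not flip the kernel, so it is technically a cross-correlation.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
image = torch.rand(1, 1, 5, 5)  # assumed toy input: one 5x5 single-channel image
conv = nn.Conv2d(in_channels=1, out_channels=1, kernel_size=3, stride=1, padding=0)


def manual_conv2d(x, weight, bias):
    """Hypothetical helper: slide one k x k filter over one channel (stride 1, no padding)."""
    k = weight.shape[-1]
    h_out = x.shape[-2] - k + 1
    w_out = x.shape[-1] - k + 1
    out = torch.empty(h_out, w_out)
    for i in range(h_out):
        for j in range(w_out):
            patch = x[0, 0, i:i + k, j:j + k]              # region under the filter
            out[i, j] = (patch * weight[0, 0]).sum() + bias[0]
    return out


with torch.no_grad():
    expected = conv(image)[0, 0]
    manual = manual_conv2d(image, conv.weight, conv.bias)

print(expected.shape)                                # torch.Size([3, 3])
print(torch.allclose(expected, manual, atol=1e-5))   # True
```

With stride 1 and no padding, a k x k filter shrinks an H x W input to (H - k + 1) x (W - k + 1), which is why the 5x5 input gives a 3x3 map; padding=1 adds a one-pixel border so that a 3x3 filter keeps a 28x28 input at 28x28.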

Convolution operator - Functional way

While I and most PyTorch practitioners love the torch.nn package (the OOP way), other practitioners prefer building neural network models in a more functional way, using torch.nn.functional. More importantly, it is possible to mix the two styles and use both modules at the same time (we already did this in the previous chapter). Here you are going to perform the same convolution as in the previous exercise, but this time the functional way: F.conv2d does not create its own weights, so the filters are passed in explicitly as a tensor of shape (out_channels, in_channels, kernel_height, kernel_width).

```python
images = torch.rand(10, 1, 28, 28)

# Create 6 filters, each of shape (in_channels=1, 3, 3)
filters = torch.rand(6, 1, 3, 3)

# Convolve the image with the filters
output_feature = F.conv2d(images, filters, stride=1, padding=1)

print(output_feature.shape)  # torch.Size([10, 6, 28, 28])
```
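Mixing the two styles is straightforward because an nn.Conv2d module simply stores its filters in `.weight` (shape `(out_channels, in_channels, kernel_height, kernel_width)`) and its biases in `.bias`. A minimal sketch, assuming the same shapes as above, checks that passing those stored parameters to F.conv2d reproduces the module's output.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

torch.manual_seed(0)
images = torch.rand(10, 1, 28, 28)

# OOP way: the module creates and stores its own weight and bias
conv = nn.Conv2d(in_channels=1, out_channels=6, kernel_size=3, stride=1, padding=1)
print(conv.weight.shape)  # torch.Size([6, 1, 3, 3])

# Functional way: pass the very same parameters explicitly
out_module = conv(images)
out_functional = F.conv2d(images, conv.weight, conv.bias, stride=1, padding=1)

print(torch.allclose(out_module, out_functional))  # True
```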

Pooling operators

```python
# A single-channel 6x6 "image" to pool
im = torch.rand(1, 1, 6, 6)
print(im)
```

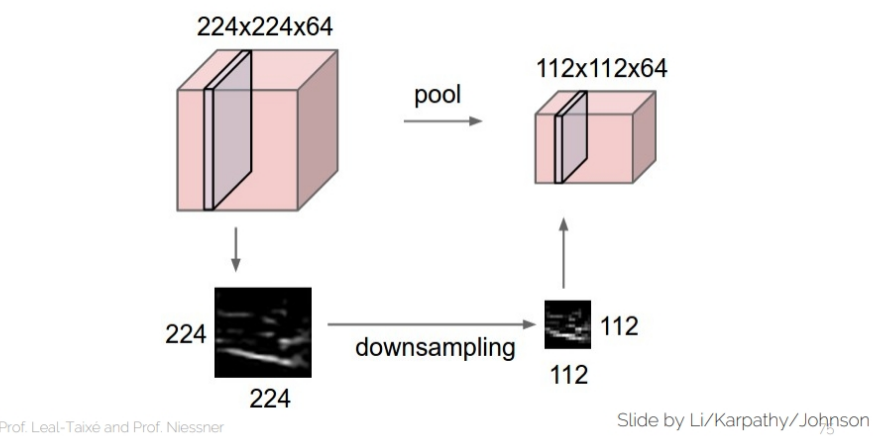

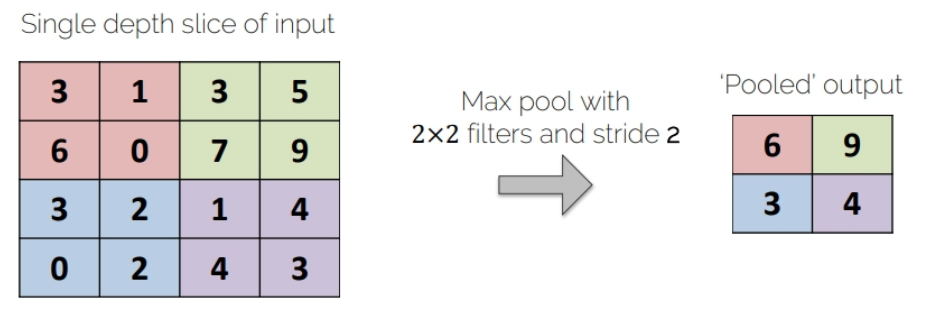

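```python
# Max pooling with a 2x2 window (the stride defaults to the window size)
max_pooling = nn.MaxPool2d(2)

# Apply the pooling operator (OOP way)
output_feature = max_pooling(im)

# Apply the pooling operator (functional way)
output_feature_F = F.max_pool2d(im, 2)

# Print the results of both cases: identical tensors of shape (1, 1, 3, 3)
print(output_feature)
print(output_feature_F)
```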
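For non-overlapping 2x2 windows, max pooling can also be written as a reshape followed by a maximum, which makes explicit what the operator computes. This sketch assumes a 6x6 input like the one above (regenerated with a fixed seed) and only covers the case where the input size is divisible by the window size.

```python
import torch
import torch.nn.functional as F

torch.manual_seed(0)
im = torch.rand(1, 1, 6, 6)

# Split the 6x6 map into a 3x3 grid of non-overlapping 2x2 windows:
# shape (N, C, 3, 2, 3, 2); dims 3 and 5 index the position inside each window
windows = im.view(1, 1, 3, 2, 3, 2)
manual_max = windows.amax(dim=(3, 5))  # largest value of each window

print(manual_max.shape)                              # torch.Size([1, 1, 3, 3])
print(torch.equal(manual_max, F.max_pool2d(im, 2)))  # True
```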

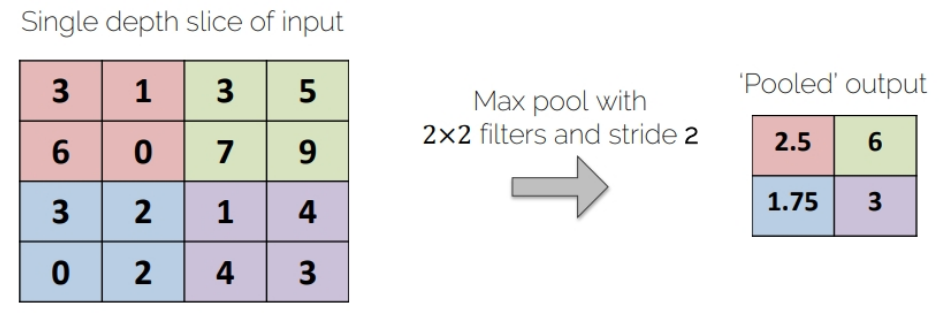

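```python
# Average pooling with a 2x2 window (the stride defaults to the window size)
avg_pooling = nn.AvgPool2d(2)

# Apply the pooling operator (OOP way)
output_feature = avg_pooling(im)

# Apply the pooling operator (functional way)
output_feature_F = F.avg_pool2d(im, 2)

# Print the results of both cases: identical tensors of shape (1, 1, 3, 3)
print(output_feature)
print(output_feature_F)
```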
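Max and average pooling differ only in how each window is summarised. Using a 4x4 input with known values 0 to 15 (an assumption chosen so the results can be checked by hand), a short comparison:

```python
import torch
import torch.nn.functional as F

# Rows: [0..3], [4..7], [8..11], [12..15]
im = torch.arange(16, dtype=torch.float32).view(1, 1, 4, 4)

max_out = F.max_pool2d(im, 2)  # largest value per 2x2 window: 5, 7, 13, 15
avg_out = F.avg_pool2d(im, 2)  # mean per 2x2 window: 2.5, 4.5, 10.5, 12.5

# Same average computed manually by reshaping into 2x2 windows
manual_avg = im.view(1, 1, 2, 2, 2, 2).mean(dim=(3, 5))

print(max_out.view(2, 2))
print(avg_out.view(2, 2))
print(torch.allclose(avg_out, manual_avg))  # True
```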

Convolutional Neural Networks

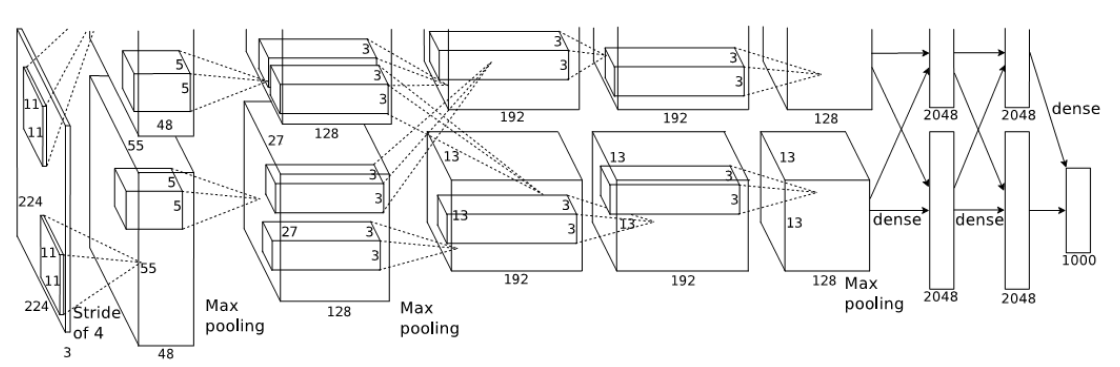

- AlexNet


Implementation in PyTorch

```python
class AlexNet(nn.Module):
    def __init__(self, num_classes=1000):
        super(AlexNet, self).__init__()
        self.conv1 = nn.Conv2d(3, 64, kernel_size=11, stride=4, padding=2)
        self.relu = nn.ReLU(inplace=True)
        self.maxpool = nn.MaxPool2d(kernel_size=3, stride=2)
        self.conv2 = nn.Conv2d(64, 192, kernel_size=5, padding=2)
        self.conv3 = nn.Conv2d(192, 384, kernel_size=3, padding=1)
        self.conv4 = nn.Conv2d(384, 256, kernel_size=3, padding=1)
        self.conv5 = nn.Conv2d(256, 256, kernel_size=3, padding=1)
        self.avgpool = nn.AdaptiveAvgPool2d((6, 6))
        self.fc1 = nn.Linear(256 * 6 * 6, 4096)
        self.fc2 = nn.Linear(4096, 4096)
        self.fc3 = nn.Linear(4096, num_classes)

    def forward(self, x):
        x = self.relu(self.conv1(x))
        x = self.maxpool(x)
        x = self.relu(self.conv2(x))
        x = self.maxpool(x)
        x = self.relu(self.conv3(x))
        x = self.relu(self.conv4(x))
        x = self.relu(self.conv5(x))
        x = self.maxpool(x)
        x = self.avgpool(x)
        x = x.view(x.size(0), 256 * 6 * 6)
        x = self.relu(self.fc1(x))
        x = self.relu(self.fc2(x))
        return self.fc3(x)
```
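The `256 * 6 * 6` input size of `fc1` follows from the spatial output-size formula for convolution and pooling layers, floor((size + 2 * padding - kernel_size) / stride) + 1. The sketch below assumes the classic 224x224 input resolution, traces it through the layers of the AlexNet class, and cross-checks the result with real layers; `conv_output_size` is a hypothetical helper.

```python
import torch
import torch.nn as nn


def conv_output_size(size, kernel_size, stride=1, padding=0):
    """Hypothetical helper: floor((size + 2p - k) / s) + 1 for conv and pooling layers."""
    return (size + 2 * padding - kernel_size) // stride + 1


# Trace an assumed 224x224 input through the AlexNet feature layers
size = 224
size = conv_output_size(size, 11, stride=4, padding=2)  # conv1   -> 55
size = conv_output_size(size, 3, stride=2)              # maxpool -> 27
size = conv_output_size(size, 5, padding=2)             # conv2   -> 27
size = conv_output_size(size, 3, stride=2)              # maxpool -> 13
size = conv_output_size(size, 3, padding=1)             # conv3-5 keep 13
size = conv_output_size(size, 3, stride=2)              # maxpool -> 6
print(size)  # 6

# Cross-check with real layers (ReLU omitted because it does not change shapes)
features = nn.Sequential(
    nn.Conv2d(3, 64, kernel_size=11, stride=4, padding=2),
    nn.MaxPool2d(kernel_size=3, stride=2),
    nn.Conv2d(64, 192, kernel_size=5, padding=2),
    nn.MaxPool2d(kernel_size=3, stride=2),
    nn.Conv2d(192, 384, kernel_size=3, padding=1),
    nn.Conv2d(384, 256, kernel_size=3, padding=1),
    nn.Conv2d(256, 256, kernel_size=3, padding=1),
    nn.MaxPool2d(kernel_size=3, stride=2),
)
with torch.no_grad():
    out = features(torch.zeros(1, 3, 224, 224))
print(out.shape)  # torch.Size([1, 256, 6, 6])
```

For a 224x224 input the feature map is already 6x6 before `self.avgpool`, so `nn.AdaptiveAvgPool2d((6, 6))` leaves it unchanged; its role is to force a 6x6 output when other input sizes are used.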

Your first CNN - init method

You are going to build your first convolutional neural network, using the MNIST dataset, which consists of handwritten digits from 0 to 9. The network has 2 convolutional layers, each followed by a ReLU nonlinearity and a 2x2 max-pooling layer, and a final fully connected layer. Each pooling layer halves both the height and the width of the image, so after 2 pooling layers the height and width are 1/4 of the original sizes. MNIST images have shape (1, 28, 28), so the spatial size goes 28 -> 14 -> 7, and the 10 feature maps of the second convolutional layer give 10 * 7 * 7 = 490 inputs to the fully connected layer.

```python
class Net(nn.Module):
    def __init__(self):
        super(Net, self).__init__()

        # Instantiate two convolutional layers
        self.conv1 = nn.Conv2d(in_channels=1, out_channels=5, kernel_size=3, padding=1)
        self.conv2 = nn.Conv2d(in_channels=5, out_channels=10, kernel_size=3, padding=1)

        # Instantiate the ReLU nonlinearity
        self.relu = nn.ReLU(inplace=True)

        # Instantiate a max pooling layer
        self.pool = nn.MaxPool2d(kernel_size=2, stride=2)

        # Instantiate a fully connected layer
        self.fc = nn.Linear(49 * 10, 10)

    def forward(self, x):
        # Apply conv followed by relu, then in next line pool
        x = self.relu(self.conv1(x))
        x = self.pool(x)

        # Apply conv followed by relu, then in next line pool
        x = self.relu(self.conv2(x))
        x = self.pool(x)

        # Prepare the image for the fully connected layer
        x = x.view(-1, 7 * 7 * 10)

        # Apply the fully connected layer and return the result
        return self.fc(x)
```
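A quick way to check the `49 * 10` input size of the fully connected layer is to push a dummy batch through the same layers and print the shapes. This sketch assumes a random batch of four 28x28 images and uses `torch.flatten`, an alternative to `x.view(-1, 7 * 7 * 10)` that keeps the batch dimension and does not hard-code the feature size.

```python
import torch
import torch.nn as nn

# Same layer settings as in Net, applied step by step
conv1 = nn.Conv2d(in_channels=1, out_channels=5, kernel_size=3, padding=1)
conv2 = nn.Conv2d(in_channels=5, out_channels=10, kernel_size=3, padding=1)
pool = nn.MaxPool2d(kernel_size=2, stride=2)

x = torch.rand(4, 1, 28, 28)           # assumed batch of 4 MNIST-sized images
x = pool(torch.relu(conv1(x)))         # -> (4, 5, 14, 14)
x = pool(torch.relu(conv2(x)))         # -> (4, 10, 7, 7)
flat = torch.flatten(x, start_dim=1)   # -> (4, 490), same values as x.view(-1, 7 * 7 * 10)
print(x.shape, flat.shape)
```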

Training CNNs

As in Chapter 2, you are going to train a neural network, but this time it is the CNN built in the previous section instead of a fully connected network. The code below loads MNIST, instantiates the network (called net), the cross-entropy loss function (called criterion) and the Adam optimizer (called optimizer), and trains for a single epoch. The original exercise subsampled the training set to make training faster; the code here uses the full training set with a batch size of 1, so the epoch takes noticeably longer.

```python
import torchvision.transforms as transforms

# Transform the data to torch tensors and normalize it
# (0.1307 and 0.3081 are the mean and standard deviation of the MNIST training pixels)
transform = transforms.Compose([
    transforms.ToTensor(),
    transforms.Normalize((0.1307,), (0.3081,))
])

# Prepare the training and test sets (download=True fetches MNIST if it is not on disk)
trainset = torchvision.datasets.MNIST('mnist', train=True, download=True, transform=transform)
testset = torchvision.datasets.MNIST('mnist', train=False, download=True, transform=transform)

# Prepare the data loaders
train_loader = torch.utils.data.DataLoader(trainset, batch_size=1, shuffle=True, num_workers=0)
test_loader = torch.utils.data.DataLoader(testset, batch_size=1, shuffle=False, num_workers=0)
```

```python
import torch.optim as optim

net = Net()
optimizer = optim.Adam(net.parameters(), lr=3e-4)
criterion = nn.CrossEntropyLoss()

for i, data in enumerate(train_loader, 0):
    inputs, labels = data

    # Reset the gradients accumulated in the previous iteration
    optimizer.zero_grad()

    # Compute the forward pass
    outputs = net(inputs)

    # Compute the loss function
    loss = criterion(outputs, labels)

    # Compute the gradients
    loss.backward()

    # Update the weights
    optimizer.step()
```
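The `optimizer.zero_grad()` call at the start of each iteration matters because `loss.backward()` adds to any gradients already stored in `.grad` instead of overwriting them. The sketch below demonstrates this accumulation on a tiny linear model with random data, an assumed setup for illustration that is not part of the exercise.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
model = nn.Linear(3, 1)       # assumed toy model
x = torch.rand(2, 3)
target = torch.rand(2, 1)
criterion = nn.MSELoss()

# First backward pass: gradients are written into .grad
criterion(model(x), target).backward()
first_grad = model.weight.grad.clone()

# Second backward pass WITHOUT zeroing: the new gradient is added to the old one
criterion(model(x), target).backward()
accumulated = model.weight.grad.clone()
print(torch.allclose(accumulated, 2 * first_grad))  # True

# Reset gradients, as optimizer.zero_grad() does for the parameters it manages
# (sets .grad to None by default since PyTorch 2.0, to zeros in older versions)
model.zero_grad()
```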

Using CNNs to make predictions

Building and training neural networks is a very exciting job (trust me, I do it every day)! However, the main purpose of neural networks is to make predictions, and the accuracy of those predictions is a large part of why deep learning has bloomed in the last few years. In this exercise, we use the convolutional neural network trained above to make predictions on the MNIST test set.

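Remember that torch.max(input, dim) returns a tuple (values, indices): the maximum along dimension dim and the position where it occurs. The network output has shape (batch_size, 10), so with dim=1 the maximum is taken over the 10 class scores of each image, and the returned indices are the predicted classes (the argmax).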
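A small example with hand-picked scores (an assumption for illustration) shows what the two return values of torch.max contain and why the dimension matters: dim=1 picks the best class for each sample, while dim=0 compares samples against each other, which is not a prediction.

```python
import torch

# Assumed scores for a batch of 2 images over 3 classes
output = torch.tensor([[0.1, 2.0, -1.0],
                       [3.0, 0.5, 0.2]])

values, indices = torch.max(output, 1)  # reduce over the class dimension
print(values)   # tensor([2., 3.])
print(indices)  # tensor([1, 0]), the predicted class of each image

# torch.argmax returns only the indices
print(torch.argmax(output, dim=1))  # tensor([1, 0])

# dim=0 reduces over the batch instead: "which image scores highest for each class"
col_values, col_indices = torch.max(output, 0)
print(col_indices)  # tensor([1, 0, 1])
```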

```python
net.eval()

# No gradients are needed for inference
with torch.no_grad():
    # Iterate over the data in the test_loader
    for i, data in enumerate(test_loader):
        # Get the image and label from data
        image, label = data

        # Make a forward pass in the net with your image
        output = net(image)

        # Argmax the results of the net (index of the highest class score)
        _, predicted = torch.max(output, 1)

        if predicted == label:
            print("Yay, your net made the right prediction " + str(predicted.item()))
        else:
            print("Your net prediction was " + str(predicted.item()) + ", but the correct label is: " + str(label.item()))

        if i > 10:
            break
```
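Printing individual predictions is a useful sanity check, but the usual summary is the accuracy over the whole test set. Here is a minimal sketch of such an evaluation loop; `evaluate_accuracy` and the `AlwaysZero` stand-in model are hypothetical, and the synthetic data only exists to make the example self-contained.

```python
import torch
import torch.nn as nn
from torch.utils.data import DataLoader, TensorDataset


def evaluate_accuracy(model, loader):
    """Hypothetical helper: fraction of correctly classified samples in `loader`."""
    model.eval()
    correct, total = 0, 0
    with torch.no_grad():  # no gradients needed for inference
        for images, labels in loader:
            predicted = model(images).argmax(dim=1)
            correct += (predicted == labels).sum().item()
            total += labels.size(0)
    return correct / total


class AlwaysZero(nn.Module):
    """Hypothetical stand-in model that always scores class 0 highest."""
    def forward(self, x):
        scores = torch.zeros(x.size(0), 10)
        scores[:, 0] = 1.0
        return scores


# Synthetic data: 2 of the 4 labels are 0, so the expected accuracy is 0.5
labels = torch.tensor([0, 0, 1, 2])
dataset = TensorDataset(torch.rand(4, 1, 28, 28), labels)
acc = evaluate_accuracy(AlwaysZero(), DataLoader(dataset, batch_size=2))
print(acc)  # 0.5
```

With the trained network, the call would look like `evaluate_accuracy(net, torch.utils.data.DataLoader(testset, batch_size=64))`; a larger batch size is usually much faster than iterating with `batch_size=1`.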