Summary Statistics with Python

Summary statistics gives you the tools you need to boil down massive datasets to reveal the highlights. In this chapter, you'll explore summary statistics including mean, median, and standard deviation, and learn how to accurately interpret them. You'll also develop your critical thinking skills, allowing you to choose the best summary statistics for your data. This is the Summary of lecture "Introduction to Statistics in Python", via datacamp.

import numpy as np

import pandas as pd

import matplotlib.pyplot as plt

plt.rcParams['figure.figsize'] = (10, 8)

What is statistics?

- Statistics

- the practice and study of collecting and analyzing data

- Summary Statistic - A fact about or summary of some data

- Example

- How likely is someone to purchase a product? Are people more likely to purchase it if they can use a different payment system?

- How many occupants will your hotel have? How can you optimize occupancy?

- How many sizes of jeans need to be manufactured so they can fit 95% of the population? Should the same number of each size be produced?

- A/B tests: Which ad is more effective in getting people to purchase product?

- Type of statistics

- Descriptive statistics

- Describe and summarize data

- Inferential statistics

- Use a sample of data to make inferences about a larger population

- Descriptive statistics

- Type of data

- Numeric (Quantitative)

- Continuous (Measured)

- Discrete (Counted)

- Categorical (Qualitative)

- Nomial (Unordered)

- Ordinal (Ordered)

- Numeric (Quantitative)

Mean and median

In this chapter, you'll be working with the 2018 Food Carbon Footprint Index from nu3. The food_consumption dataset contains information about the kilograms of food consumed per person per year in each country in each food category (consumption) as well as information about the carbon footprint of that food category (co2_emissions) measured in kilograms of carbon dioxide, or $CO_2$, per person per year in each country.

In this exercise, you'll compute measures of center to compare food consumption in the US and Belgium.

food_consumption = pd.read_csv('./dataset/food_consumption.csv', index_col=0)

food_consumption.head()

be_consumption = food_consumption[food_consumption['country'] == 'Belgium']

# Filter for USA

usa_consumption = food_consumption[food_consumption['country'] == 'USA']

# Calculate mean and median consumption in Belgium

print(np.mean(be_consumption['consumption']))

print(np.median(be_consumption['consumption']))

# Calculate mean and median consumption of USA

print(np.mean(usa_consumption['consumption']))

print(np.median(usa_consumption['consumption']))

or

be_and_usa = food_consumption[(food_consumption['country'] == 'Belgium') |

(food_consumption['country'] == 'USA')]

# Group by country, select consumption column, and compute mean and median

print(be_and_usa.groupby('country')['consumption'].agg([np.mean, np.median]))

Mean vs. median

In the video, you learned that the mean is the sum of all the data points divided by the total number of data points, and the median is the middle value of the dataset where 50% of the data is less than the median, and 50% of the data is greater than the median. In this exercise, you'll compare these two measures of center.

rice_consumption = food_consumption[food_consumption['food_category'] == 'rice']

# Histogram of co2_emission for rice and show plot

rice_consumption['co2_emission'].hist();

# Calculate mean and median of co2_emission with .agg()

print(rice_consumption['co2_emission'].agg([np.mean, np.median]))

Measures of spread

- Variance

- Average distance from each data point to the data's mean

- Standard deviation

- Mean absolute deviation

- Standard deviation vs. mean absolute deviation

- Standard deviation squares distances, penalizing longer distances more than shorter ones

- Mean absolute deviation penalizes each distance equally

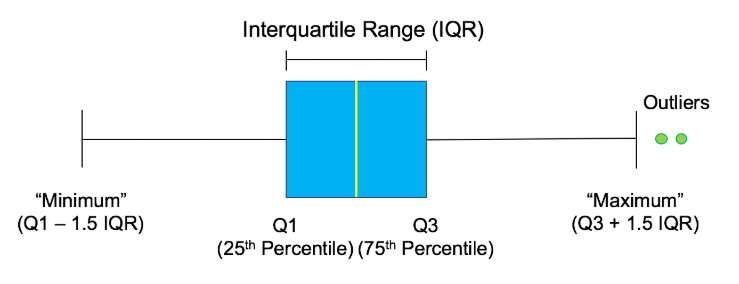

- Quantiles (Or percentiles)

- Interquartile range (IQR)

- Height of the box in a boxplot

- Outliers

- Data point that is substantially different from the others

Quartiles, quantiles, and quintiles

Quantiles are a great way of summarizing numerical data since they can be used to measure center and spread, as well as to get a sense of where a data point stands in relation to the rest of the data set. For example, you might want to give a discount to the 10% most active users on a website.

In this exercise, you'll calculate quartiles, quintiles, and deciles, which split up a dataset into 4, 5, and 10 pieces, respectively.

print(np.quantile(food_consumption['co2_emission'], np.linspace(0, 1, 5)))

# Calculate the quintiles of co2_emission

print(np.quantile(food_consumption['co2_emission'], np.linspace(0, 1, 6)))

# Calculate the deciles of co2_emission

print(np.quantile(food_consumption['co2_emission'], np.linspace(0, 1, 10)))

Variance and standard deviation

Variance and standard deviation are two of the most common ways to measure the spread of a variable, and you'll practice calculating these in this exercise. Spread is important since it can help inform expectations. For example, if a salesperson sells a mean of 20 products a day, but has a standard deviation of 10 products, there will probably be days where they sell 40 products, but also days where they only sell one or two. Information like this is important, especially when making predictions.

print(food_consumption.groupby('food_category')['co2_emission'].agg([np.var, np.std]))

# Create histogram of co2_emission for food_category 'beef'

food_consumption[food_consumption['food_category'] == 'beef']['co2_emission'].hist();

food_consumption[food_consumption['food_category'] == 'eggs']['co2_emission'].hist();

Finding outliers using IQR

Outliers can have big effects on statistics like mean, as well as statistics that rely on the mean, such as variance and standard deviation. Interquartile range, or IQR, is another way of measuring spread that's less influenced by outliers. IQR is also often used to find outliers. If a value is less than $Q1−1.5×IQR$ or greater than $Q3+1.5×IQR$, it's considered an outlier.

In this exercise, you'll calculate IQR and use it to find some outliers.

In this exercise, you'll calculate IQR and use it to find some outliers.

emissions_by_country = food_consumption.groupby('country')['co2_emission'].sum()

print(emissions_by_country)

q1 = np.quantile(emissions_by_country, 0.25)

q3 = np.quantile(emissions_by_country, 0.75)

iqr = q3 - q1

# Calculate the lower and upper cutoffs for outliers

lower = q1 - 1.5 * iqr

upper = q3 + 1.5 * iqr

# Subset emissions_by_country to find outliers

outliers = emissions_by_country[(emissions_by_country > upper) | (emissions_by_country < lower)]

print(outliers)